You launch your shiny new app on Kubernetes. Everything’s humming along nicely, users are happy, and you start to relax. Then out of nowhere, traffic spikes. Pages crawl, users complain, requests start failing. Panic mode: you scramble to add more servers. The storm passes, your app recovers, and now you’re stuck paying for all those extra machines that barely do anything once traffic calms down.

If that story feels a little too familiar, welcome to the age-old cloud problem:

Overprovision, and you burn money.

Underprovision, and your users pay the price.

The good news? Kubernetes has a well-established answer to this mess: autoscaling. With it, your cluster grows when you need extra muscle and shrinks when you don’t. Less babysitting, fewer 2 a.m. fire drills.

In this post, I’ll walk you through the two main tools that make this happen: the trusty old Cluster Autoscaler and the newer, leaner Karpenter.

Scaling in Kubernetes: A Quick Analogy

Before diving into nodes and Pods, let’s simplify. Think of Kubernetes like running a grocery store.

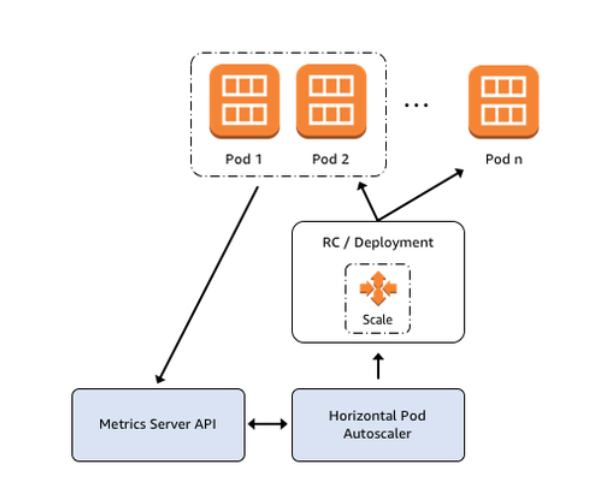

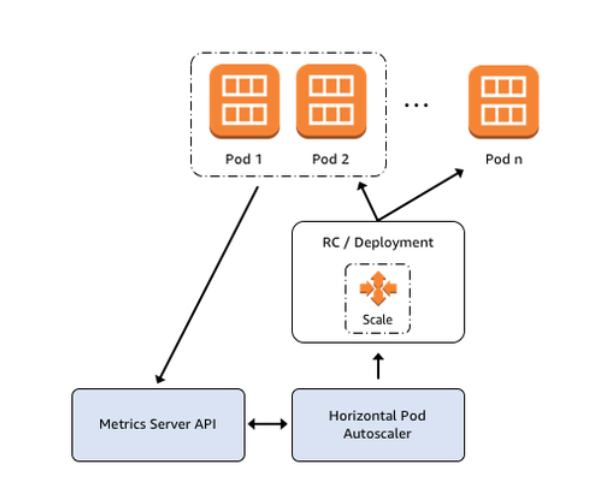

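- Horizontal Pod Autoscaler (HPA): When checkout lines get long, you don’t build another store; you just open more registers. That’s what HPA does: when a metric such as CPU utilization climbs past the target you set, it spins up extra Pods (like cashiers) to handle the rush, and it scales them back in once the rush is over.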

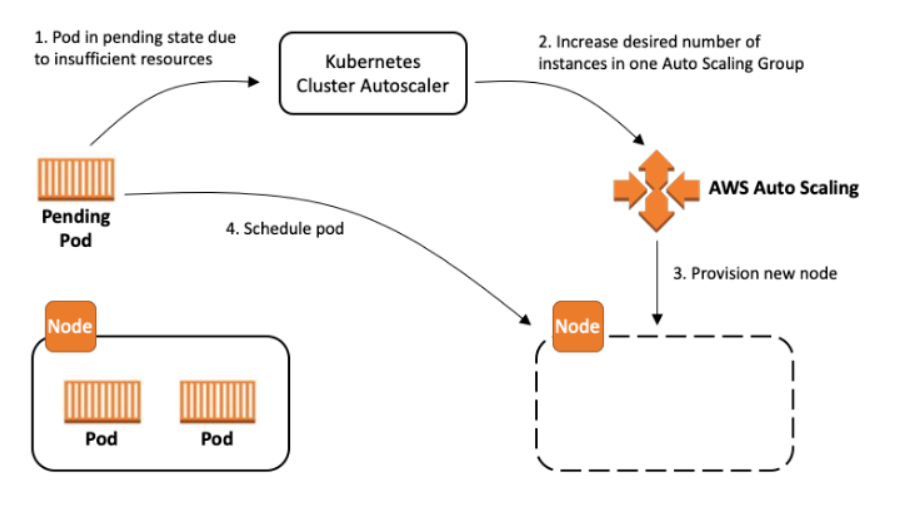

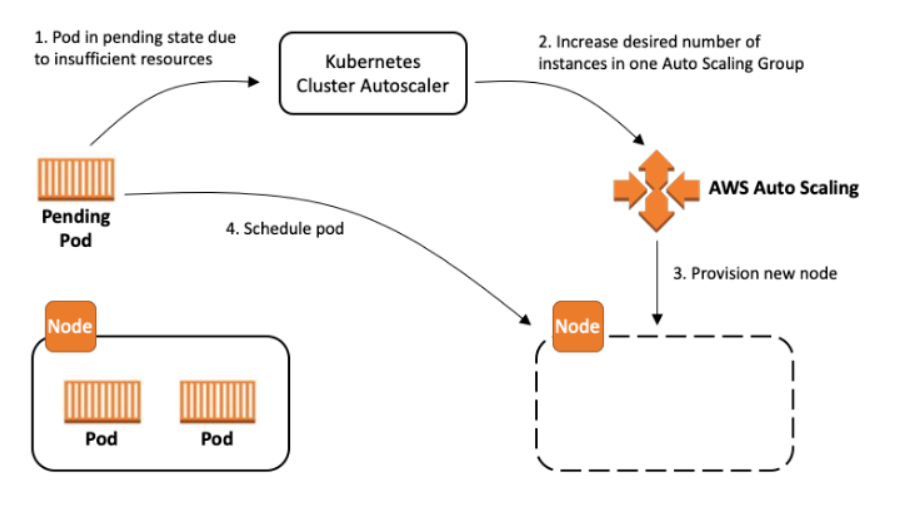

- Cluster autoscaling: But what if every register is already open and the store itself is packed? At that point, you need to expand the store, literally adding another wing. In Kubernetes terms, new Pods can’t be scheduled because every Node is already full, so you add more Nodes. That’s cluster autoscaling.
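HPA’s “open more registers” decision isn’t guesswork. The Kubernetes documentation gives its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio is within a small tolerance (0.1 by default). Here’s a minimal Python sketch of that rule. The function name and default bounds are my own choices for illustration, and the real controller also handles stabilization windows, missing metrics, and not-yet-ready Pods, which this ignores.

```python
import math


# Hypothetical helper for illustration; not the actual HPA controller code.
def hpa_desired_replicas(current_replicas: int,
                         current_metric: float,
                         target_metric: float,
                         min_replicas: int = 1,
                         max_replicas: int = 10,
                         tolerance: float = 0.1) -> int:
    """Simplified HPA rule: ceil(current_replicas * current / target)."""
    ratio = current_metric / target_metric
    # Close enough to the target: leave the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Respect the min/max bounds configured on the autoscaler.
    return max(min_replicas, min(max_replicas, desired))


# 4 Pods running at 90% CPU against a 60% target -> scale to 6.
print(hpa_desired_replicas(4, current_metric=90, target_metric=60))
```

The tolerance band is what keeps HPA from flapping between replica counts when the metric hovers just around the target.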

This guide focuses on that second part: scaling your infrastructure.

The Veteran: Cluster Autoscaler

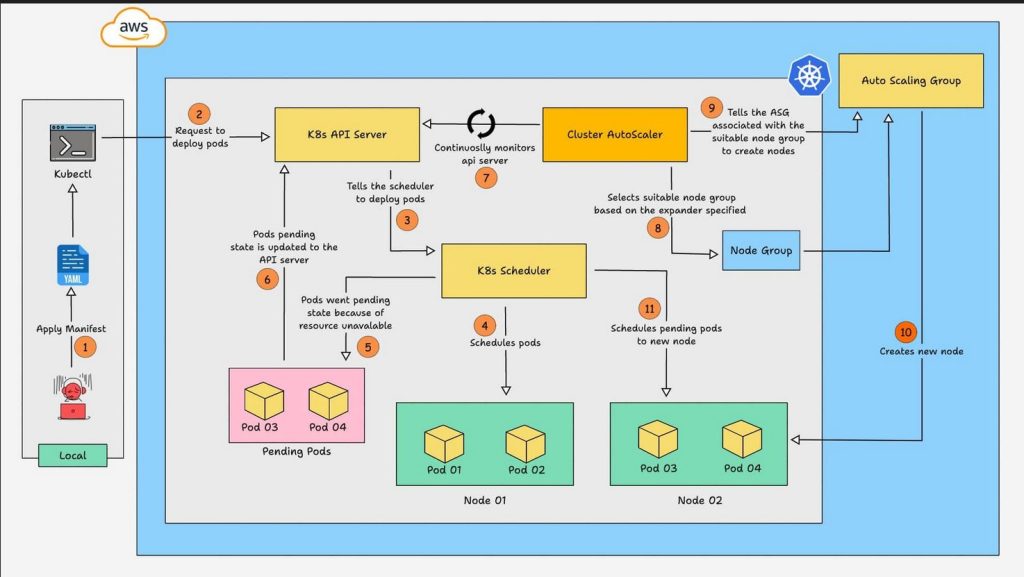

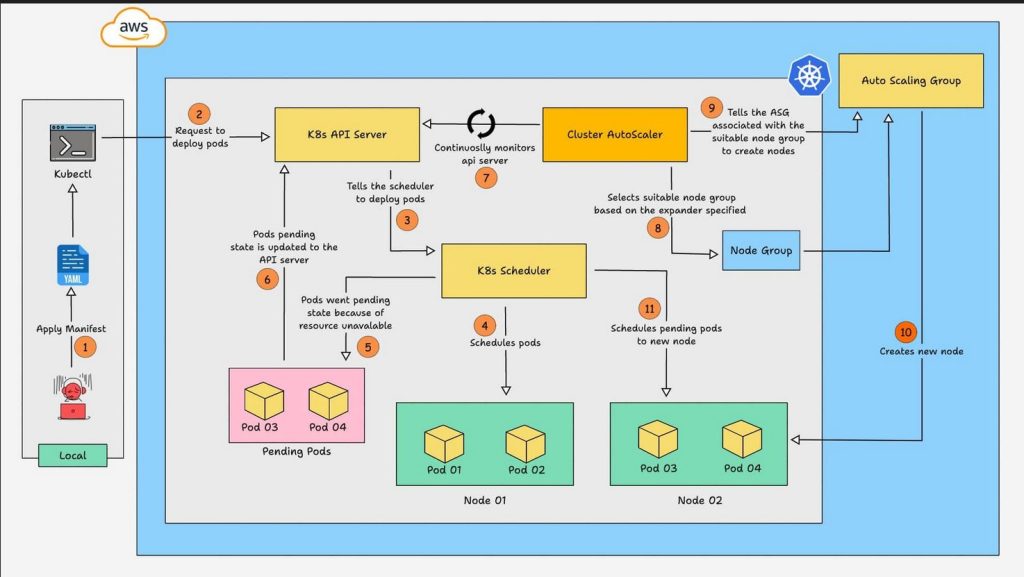

Cluster Autoscaler (CA) has been around long enough to earn the “default” badge. It’s supported by all the big clouds (AWS, Azure, GCP) and is considered the safe choice.

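Here’s the gist: you define a group of servers (an Auto Scaling group on AWS, a node pool on GKE or AKS) with a minimum and maximum size, say between 2 and 10. CA watches for Pods stuck in Pending because no existing node has room for them. When it finds some, it asks your cloud provider to add nodes to that group, up to the maximum. Later, if a node stays underutilized for a while (10 minutes by default) and its Pods can fit elsewhere, CA drains and removes it, never going below the minimum.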
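To make those two decisions concrete, here’s a heavily simplified Python sketch for a single, CPU-only node group. `NodeGroup`, `scale_up_target`, and `scale_down_candidates` are hypothetical names for illustration. The real CA simulates the full scheduler (memory, affinities, taints), checks that evicted Pods can actually land elsewhere, and waits before removing anything. The 0.5 threshold mirrors the default of CA’s `--scale-down-utilization-threshold` flag.

```python
from dataclasses import dataclass


# Hypothetical helpers for illustration; not Cluster Autoscaler's actual code.
@dataclass
class NodeGroup:
    """Illustrative stand-in for an Auto Scaling group / node pool."""
    min_size: int
    max_size: int
    node_cpu: int  # CPU per node in millicores; every node is identical


def new_nodes_needed(group: NodeGroup, pending_cpu: list[int]) -> int:
    """First-fit-decreasing estimate of how many fresh nodes pending Pods need."""
    free_per_new_node: list[int] = []
    for request in sorted(pending_cpu, reverse=True):
        if request > group.node_cpu:
            continue  # too big for this group's nodes; stays Pending
        for i, free in enumerate(free_per_new_node):
            if free >= request:
                free_per_new_node[i] -= request
                break
        else:
            free_per_new_node.append(group.node_cpu - request)
    return len(free_per_new_node)


def scale_up_target(group: NodeGroup, current: int, pending_cpu: list[int]) -> int:
    """Desired group size after scale-up, capped at the group's maximum."""
    return min(group.max_size, current + new_nodes_needed(group, pending_cpu))


def scale_down_candidates(group: NodeGroup, used_cpu: list[int],
                          threshold: float = 0.5) -> list[int]:
    """Indexes of nodes below the utilization threshold, keeping min_size."""
    removable = len(used_cpu) - group.min_size
    candidates: list[int] = []
    for i, used in enumerate(used_cpu):
        if removable <= 0:
            break
        if used / group.node_cpu < threshold:
            candidates.append(i)
            removable -= 1
    return candidates


group = NodeGroup(min_size=2, max_size=10, node_cpu=4000)
print(scale_up_target(group, current=3, pending_cpu=[3000, 2000, 1500, 500]))
print(scale_down_candidates(group, used_cpu=[3500, 800, 500, 3900]))
```

Note how the fixed `node_cpu` bakes in the rigidity discussed next: every new node is the same shape, whatever the pending Pods look like.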

Why folks stick with it: it’s stable, predictable, and works everywhere.

But let’s be real—CA has its limits:

- You’re tied to the instance types you defined in advance. Need GPUs? You’ll have to set up a special group just for that.

- It’s not exactly speedy—new nodes can take a few minutes to appear.

- Sometimes it wastes money, like spinning up a massive server for one tiny Pod just because that’s the only option available.
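The “only option available” problem is easy to see if you model how CA picks which group to grow. CA’s `--expander` flag controls that choice; the `least-waste` expander prefers the fitting group that leaves the least idle capacity after scale-up. The sketch below imitates that idea for CPU and GPUs only. `GroupTemplate` and `pick_group` are hypothetical, and the group names are made up.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical helpers for illustration; not Cluster Autoscaler's actual code.
@dataclass
class GroupTemplate:
    """What every node in a predefined group looks like (illustrative)."""
    name: str
    cpu: int       # millicores per node
    gpus: int = 0  # GPUs per node


def pick_group(groups: list[GroupTemplate], pod_cpu: int,
               pod_gpus: int = 0) -> Optional[GroupTemplate]:
    """Least-waste style choice: the fitting group with the least spare CPU."""
    fitting = [g for g in groups if g.cpu >= pod_cpu and g.gpus >= pod_gpus]
    if not fitting:
        return None  # no predefined group can host the Pod: it stays Pending
    return min(fitting, key=lambda g: g.cpu - pod_cpu)


only_big = [GroupTemplate("big-16cpu", cpu=16000)]
choice = pick_group(only_big, pod_cpu=250)
print(choice.name, f"{250 / choice.cpu:.1%} used")  # big-16cpu 1.6% used
print(pick_group(only_big, pod_cpu=250, pod_gpus=1))  # None: needs a GPU group
```

With only one large group defined, a quarter-core Pod still gets a 16-core node, and a GPU Pod gets nothing until someone adds a GPU group.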

The Challenger: Karpenter

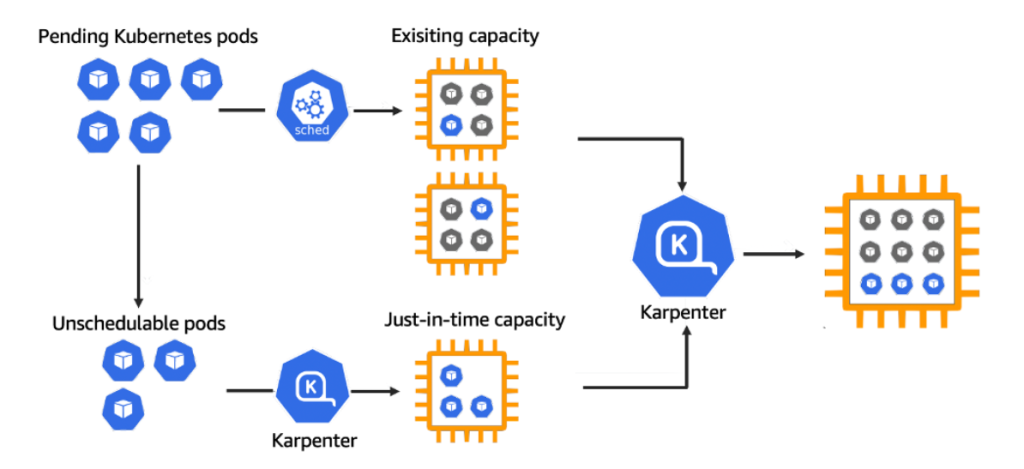

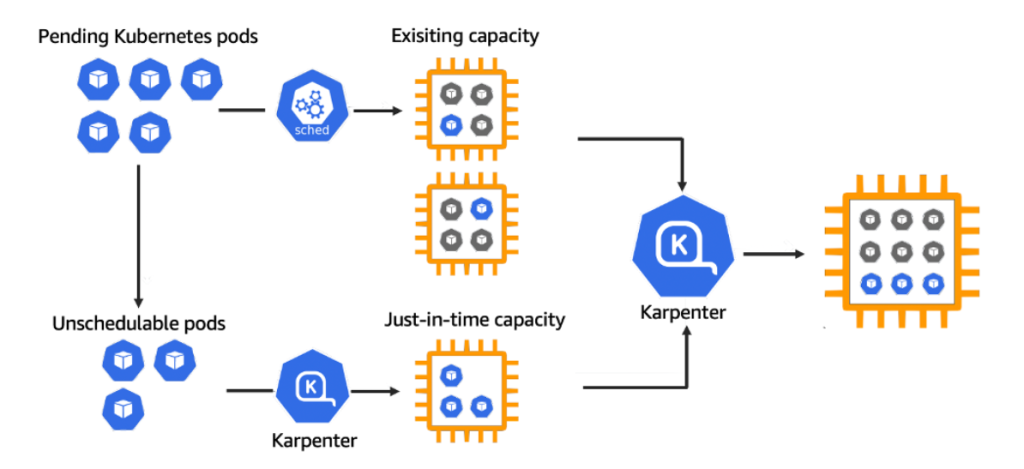

Karpenter is AWS’s answer to faster, smarter autoscaling. Instead of juggling fixed node groups, Karpenter looks at what your pending Pods actually need and launches a machine that fits them on the fly.

With Cluster Autoscaler, the message is: “Here’s a pool of 10 servers—use them wisely.”

With Karpenter, it’s more like: “Tell me what you need, and I’ll go grab the cheapest machine that fits—right now.”

Here’s how it plays out:

- A Pod can’t find room and goes Pending.

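- Karpenter notices it almost immediately.

- It checks the Pod’s specs: CPU, memory, GPUs, architecture, even preferred zones.

- Then it calls the EC2 API directly, without going through an Auto Scaling group: “Launch the cheapest instance that matches this.”

- As soon as the new node boots and joins the cluster, the Pod is scheduled and running.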
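Here’s a rough Python sketch of that matching step: filter a catalog of instance types by the Pod’s requirements, then take the cheapest. The instance names, prices, and zone labels are invented, and `cheapest_fit` and `PodNeeds` are hypothetical helpers. Real Karpenter batches many pending Pods together, bin-packs them, and respects the constraints you set in your NodePool, none of which this models.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical helpers for illustration; not Karpenter's actual code.
@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpu: float
    memory_gib: float
    gpus: int
    arch: str               # "amd64" or "arm64"
    zones: frozenset[str]
    hourly_price: float     # invented numbers, for illustration only


@dataclass(frozen=True)
class PodNeeds:
    vcpu: float
    memory_gib: float
    gpus: int = 0
    arch: Optional[str] = None   # None means any architecture is fine
    zone: Optional[str] = None   # None means any zone is fine


def cheapest_fit(pod: PodNeeds, catalog: list[InstanceType]) -> Optional[InstanceType]:
    """Return the lowest-priced instance type that satisfies every requirement."""
    fits = [
        it for it in catalog
        if it.vcpu >= pod.vcpu
        and it.memory_gib >= pod.memory_gib
        and it.gpus >= pod.gpus
        and (pod.arch is None or it.arch == pod.arch)
        and (pod.zone is None or pod.zone in it.zones)
    ]
    return min(fits, key=lambda it: it.hourly_price, default=None)


CATALOG = [
    InstanceType("tiny-arm", 2, 4, 0, "arm64", frozenset({"zone-a", "zone-b"}), 0.03),
    InstanceType("mid-x86", 4, 16, 0, "amd64", frozenset({"zone-a", "zone-b"}), 0.10),
    InstanceType("big-x86", 16, 64, 0, "amd64", frozenset({"zone-a"}), 0.40),
    InstanceType("gpu-x86", 8, 32, 1, "amd64", frozenset({"zone-b"}), 0.90),
]

print(cheapest_fit(PodNeeds(vcpu=0.5, memory_gib=1), CATALOG).name)  # tiny-arm
print(cheapest_fit(PodNeeds(vcpu=0.5, memory_gib=1, arch="amd64"), CATALOG).name)  # mid-x86
```

Compare this with the node-group sketch for Cluster Autoscaler: there is no predefined shape here, so a tiny Pod gets a tiny machine and a GPU Pod gets a GPU machine without anyone creating a group first.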

When traffic dips, Karpenter doesn’t just leave machines running. It consolidates: it moves Pods off underused nodes onto other nodes (or onto a cheaper replacement), then shuts down the nodes it emptied. You can also set an expiration time so nodes are replaced automatically after a certain age, which is handy for cost control and for picking up security patches.
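A toy Python version of both behaviors follows. `Node`, `plan_consolidation`, and `expired_nodes` are hypothetical names, and the sketch only models CPU requests and the “delete a node whose Pods fit elsewhere” case. Real Karpenter also considers replacing a node with a cheaper one, honors PodDisruptionBudgets and its own disruption budgets, and actually drains nodes rather than returning names. The 720-hour expiry below is just an example value.

```python
from dataclasses import dataclass, field


# Hypothetical helpers for illustration; not Karpenter's actual code.
@dataclass
class Node:
    name: str
    capacity_cpu: int                               # millicores
    pods: list[int] = field(default_factory=list)   # CPU request of each Pod
    age_hours: float = 0.0


def plan_consolidation(nodes: list[Node]) -> list[str]:
    """Greedy sketch: remove nodes whose Pods can be repacked onto the rest."""
    pods = {n.name: list(n.pods) for n in nodes}
    capacity = {n.name: n.capacity_cpu for n in nodes}
    removed: list[str] = []
    # Try the least-loaded nodes first: they are the cheapest to empty.
    for name in sorted(pods, key=lambda k: sum(pods[k])):
        targets = [t for t in pods if t != name and t not in removed]
        used = {t: sum(pods[t]) for t in targets}
        moves = []
        for request in sorted(pods[name], reverse=True):
            dest = next((t for t in targets if capacity[t] - used[t] >= request), None)
            if dest is None:
                break  # this Pod has nowhere to go, so keep the node
            used[dest] += request
            moves.append((dest, request))
        else:
            # Every Pod found a home: commit the moves and retire the node.
            for dest, request in moves:
                pods[dest].append(request)
            pods[name] = []
            removed.append(name)
    return removed


def expired_nodes(nodes: list[Node], expire_after_hours: float) -> list[str]:
    """Nodes old enough to be replaced with fresh ones (e.g. newer images)."""
    return [n.name for n in nodes if n.age_hours >= expire_after_hours]


fleet = [
    Node("a", 4000, [3000], age_hours=10),
    Node("b", 4000, [500], age_hours=800),
    Node("c", 4000, [], age_hours=0),
    Node("d", 4000, [1000, 1000], age_hours=721),
]
print(plan_consolidation(fleet))
print(expired_nodes(fleet, expire_after_hours=720))
```

Here the empty node `c` goes first, then `b`’s single small Pod moves onto `a`; `d` stays because its Pods no longer fit anywhere else.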

Why teams love it: it’s quick, efficient, and not boxed in by rigid groups. Extra features like consolidation and node expiration are icing on the cake.

Where it still needs time: it’s newer, AWS-first, and the setup is a bit more involved compared to CA.

So, How Do They Compare?

Both tools are solving the same puzzle—keeping your cluster from being too big or too small—but they approach it differently.

Cluster Autoscaler is the old reliable workhorse. It does what you tell it, manages predefined groups, and scales when workloads overflow. It’s predictable and multi-cloud, but a little rigid and sometimes wasteful.

Karpenter is more like a smart assistant. It listens to your workloads, finds the best match, and quickly launches exactly what you need. It’s faster, often cheaper, and more flexible, but it shines brightest on AWS and is still maturing elsewhere.

Which One’s Right for You?

Choose Cluster Autoscaler if:

- Stability and maturity matter most.

- You’re working across multiple clouds.

- Your workloads are steady and you’re fine predefining node groups.

Choose Karpenter if:

- You’re on AWS.

- Speed and cost savings are your priorities.

- Your workloads are diverse and dynamic (web apps, ML jobs, batch jobs, etc.).

- You want advanced features like consolidation and node expiration.

Conclusion

Autoscaling is Kubernetes’ way of making sure your cluster is always “just right.”

- Cluster Autoscaler is the seasoned veteran—dependable, steady, and multi-cloud.

- Karpenter is the rising star—fast, flexible, and laser-focused on efficiency (especially on AWS).

If you’re starting a new project on AWS, Karpenter is quickly becoming the go-to. If you value battle-tested stability across different providers, Cluster Autoscaler is still a safe bet. Either way, once autoscaling is in place, you’ll spend far less time worrying about wasted resources and more time building things that matter. The SupportPRO team is here if you need help with any of this.

Partner with SupportPRO for 24/7 proactive cloud support that keeps your business secure, scalable, and ahead of the curve.